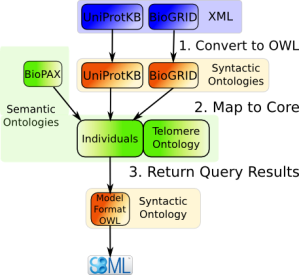

Golbreich et al. describe a formal method of converting OBO to OWL 1.1 files, and vice versa. Their code has been integrated into the OWL API, a set of classes that is widely used within the OWL community. For instance, Protege 4 is built on the OWL API. While there have been other efforts in the past to map between the OBO flat-file format and OWL (the authors specifically mention Chris Mungall’s work on an XSLT used as a plugin within Protege that can perform the conversion), none were done in a formal or rigorous manner. By defining an exact relationship between OBO and OWL constructs using consensus information provided by the OBO community, the authors have provided a more robust method of mapping than has been available to date.

Consequently, the entire library of tools, reasoners and editors available to the OWL community is now also available to OBO developers in a way that does not force them to permanently leave the format and environment that they are used to.

OBO ontologies are ontologies developed within the biological and biomedical domains that follow a standard, if often non-rigorously-defined, syntax and semantics. The best known of the OBO ontologies is the Gene Ontology (GO). When you choose OBO you subscribe not only to the format but also to the ideas behind the OBO Foundry, which aims to limit overlap of ontologies in related fields, and which provides a communal environment (mailing lists, websites, etc.) in which to develop. OWL (the Web Ontology Language) has three dialects, of which OWL-DL (DL stands for Description Logics) is the most commonly used. OWL-DL is favored by ontologists wishing to perform computational analyses over ontologies, as it has not just rigorously-defined formal semantics but also a wide user base and a suite of reasoning tools developed by multiple groups.

An OBO file is composed of stanzas, each describing one element of the ontology. Below is an example of a term stanza, which describes the term's location in the larger ontology:

    [Term]
    id: GO:0001555
    name: oocyte growth
    is_a: GO:0016049 ! cell growth
    relationship: part_of GO:0048601 ! oocyte morphogenesis
    intersection_of: GO:0040007 ! growth
    intersection_of: has_central_participant CL:0000023 ! oocyte
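
To make the stanza structure concrete, here is a minimal Python sketch that splits a stanza like the one above into its type and tag-value pairs. It is my own illustration, not the parser from the paper or the OWL API: the function name `parse_stanza` is hypothetical, and it assumes that everything after a `!` is a comment and that values never contain a `!` themselves.

```python
from collections import defaultdict

STANZA = """\
[Term]
id: GO:0001555
name: oocyte growth
is_a: GO:0016049 ! cell growth
relationship: part_of GO:0048601 ! oocyte morphogenesis
intersection_of: GO:0040007 ! growth
intersection_of: has_central_participant CL:0000023 ! oocyte
"""


def parse_stanza(text):
    """Parse one OBO stanza into (stanza_type, {tag: [values]})."""
    stanza_type = None
    tags = defaultdict(list)
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        # A header such as "[Term]" names the kind of stanza.
        if line.startswith("[") and line.endswith("]"):
            stanza_type = line[1:-1]
            continue
        # Simplifying assumption: "!" always starts a trailing comment.
        line = line.split("!", 1)[0].strip()
        # Split on the first colon only, since ids contain colons too.
        tag, _, value = line.partition(":")
        tags[tag.strip()].append(value.strip())
    return stanza_type, dict(tags)


stanza_type, tags = parse_stanza(STANZA)
print(stanza_type, tags["id"], tags["relationship"])
```

Tags can repeat (there are two `intersection_of` lines here), which is why each tag maps to a list of values rather than a single value.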

Before they could start writing the parsing and mapping programs, the authors had to formalize both the semantics and the syntax of OBO. This is something that would normally be done by the developers of the format, not its users, but both the syntax and semantics of OBO are only defined in natural language. These natural language definitions often lead to imprecision and, in extreme cases, no consensus was reached for some of the OBO constructs. However, the diligence of the authors in getting consensus from the OBO community should be rewarded in future by the OBO community feeling confident in the mapping, and therefore also in using the OWL tools now available to them. An example of the natural language definitions in the OBO User Guide follows:

> This tag describes a typed relationship between this term and another term. […] The necessary modifier allows a relationship to be marked as “not necessarily true”. […]

Neither “necessarily true” nor “relationship” has been defined. You can, in fact, computationally define a relation in three different ways (taking their stanza example from above):

- existentially, where each instance of GO:0001555 must have at least one part_of relationship to an instance of the term GO:0048601;
- universally, where instances of GO:0001555 can *only* be connected via part_of to instances of GO:0048601;
- via a constraint interpretation, where the endpoints of the relationship *must* be known, but which cannot in any case be expressed with DL, so is not useful to this discussion.
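
The difference between the first two readings is easier to see written out as OWL axioms. The Python sketch below is only an illustration: it renders each reading as a string in OWL functional-style syntax, using `ObjectSomeValuesFrom` for the existential reading and `ObjectAllValuesFrom` for the universal one. The helper names are hypothetical, the identifiers are written as abbreviated names with prefix declarations omitted, and nothing here says which reading the paper's mapping adopts.

```python
def existential(sub, prop, obj):
    """Every instance of `sub` has at least one `prop` link to an instance of `obj`."""
    return f"SubClassOf({sub} ObjectSomeValuesFrom({prop} {obj}))"


def universal(sub, prop, obj):
    """Instances of `sub` may only have `prop` links to instances of `obj`."""
    return f"SubClassOf({sub} ObjectAllValuesFrom({prop} {obj}))"


# The relationship line from the stanza example above.
print(existential("GO:0001555", ":part_of", "GO:0048601"))
print(universal("GO:0001555", ":part_of", "GO:0048601"))
```

Note that the universal axiom on its own is satisfied by an instance with no part_of links at all, which is one reason the two readings are not interchangeable.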

OBO-Edit does not always infer what should be inferred if all of the rules of its User Guide are followed. There is a good example of this in the paper.

In their formal representation of the OBO syntax the authors used a BNF grammar that is backwards-compatible with OBO. Many of the mappings are quite straightforward: OBO terms become OWL classes, OBO relationship types become OWL properties, OBO instances become OWL individuals, OBO ids become the URIs in OWL, and OBO names become OWL labels. is_a, disjoint_from, domain and range have direct OWL equivalents. Other places needed more complex mapping, such as deciding whether an OBO relationship type should become an OWL object property or a datatype property.
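
As a rough illustration of the straightforward mappings, the sketch below turns a parsed term into a few axioms in OWL functional-style syntax: a class declaration, an rdfs:label annotation for the name, and a SubClassOf axiom per is_a. Everything specific in it is an assumption of mine rather than the paper's scheme: the base IRI `http://example.org/obo/`, the choice to replace `:` with `_` when building the IRI, and the helper names.

```python
BASE = "http://example.org/obo/"  # hypothetical base IRI, for illustration only


def obo_id_to_iri(obo_id):
    """Build an IRI from an OBO id (assumed scheme: GO:0001555 -> .../GO_0001555)."""
    return BASE + obo_id.replace(":", "_")


def term_to_axioms(term):
    """Map a dict like {'id': ..., 'name': ..., 'is_a': [...]} to axiom strings."""
    iri = f"<{obo_id_to_iri(term['id'])}>"
    # OBO term -> OWL class.
    axioms = [f"Declaration(Class({iri}))"]
    # OBO name -> OWL label (no escaping of quotes in the name is attempted).
    name = term.get("name")
    if name is not None:
        axioms.append(f'AnnotationAssertion(rdfs:label {iri} "{name}")')
    # OBO is_a -> OWL SubClassOf.
    for parent in term.get("is_a", []):
        axioms.append(f"SubClassOf({iri} <{obo_id_to_iri(parent)}>)")
    return axioms


for axiom in term_to_axioms(
    {"id": "GO:0001555", "name": "oocyte growth", "is_a": ["GO:0016049"]}
):
    print(axiom)
```

The harder cases mentioned above, such as choosing between object and datatype properties, are deliberately left out of this sketch.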

Using OWL reasoners over OBO ontologies not only works, but in the case of the Sequence Ontology (SO) it also found a term that had only a single intersection_of statement. Such a term is illegal according to OBO rules, yet it hadn’t been found by OBO-Edit.
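
The rule that was broken here is simple enough to check directly. The sketch below is a plain syntactic check over already-parsed terms, not what an OWL reasoner does and not the method used in the paper; the function name and the `EX:` ids in the sample data are made up for illustration.

```python
def single_intersection_terms(terms):
    """Return the ids of terms that carry exactly one intersection_of tag."""
    return [
        term["id"]
        for term in terms
        if len(term.get("intersection_of", [])) == 1
    ]


# Hypothetical sample data: only the second term breaks the rule.
sample_terms = [
    {"id": "EX:0000001",
     "intersection_of": ["EX:0000010", "part_of EX:0000020"]},
    {"id": "EX:0000002", "intersection_of": ["EX:0000010"]},
    {"id": "EX:0000003"},
]

print(single_intersection_terms(sample_terms))
```

A term with no intersection_of tags at all is fine, so only a count of exactly one is flagged.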

Up until now, I’ve been unsure as to how the OWL files are created from files in the OBO format. This was a paper that was clear and to the point. Thanks very much!

Update December 2008: I originally posted this without the BPR3 / ResearchBlogging.org tag, as I was unsure where conference proceedings came in the “peer-reviewed research” part of the guidelines. However, as I’m now getting back into the whole researchblogging thing, I feel (having read many of the posts of my fellow research bloggers) that this would be suitable. If anyone has any opinions, I’d be most interested!

Golbreich, C., Horridge, M., Horrocks, I., Motik, B., Shearer, R. (2008). OBO and OWL: Leveraging Semantic Web Technologies for the Life Sciences. Lecture Notes in Computer Science, 4825/2008, 169-182. DOI: 10.1007/978-3-540-76298-0_13
